What should be done about online harassment is a question that has troubled online platforms and human rights advocates for some time. Where should the line between free speech and harassment be drawn, and who should draw it? As this question goes unanswered, harassment continues to happen every day, across multiple platforms, and around the world.

Over the past few years, consensus has emerged amongst human rights defenders that targeted online harassment constitutes a form of silencing, in that it often leads to self-censorship. A recent study from the Data and Society Research Institute and the Center for Innovative Public Health Research (CIPHR) demonstrated that online harassment has real-life consequences; 43 percent of harassment victims report having changed their contact information following an incident, while 26 percent stopped using social media, their phone, or the internet altogether.

Although incidents in the global south tend to receive less publicity than those in the United States and Europe, online harassment is a truly global phenomenon. As a report on "cyber violence" from the UN Broadband Commission for Digital Development Working Group on Broadband and Gender states, online crimes "seamlessly follow the spread of the Internet", with new technologies turning into new tools of harassment in places of high adoption. For example, a 2017 study from Pakistan's Digital Rights Foundation found that 40 percent of women in the country had experienced online sexual harassment, with much of it occurring on Facebook.

This phenomenon is particularly stark when it comes to Twitter. Over the past few years, a number of prominent users, particularly women, have departed the platform after experiencing harassment. One notable example is that of Lindy West, an American feminist writer and fat acceptance activist, who publicly declared her exit from the platform, calling it "unusable for anyone but trolls, robots and dictators." Twitter, wrote West, "for the past five years, has been a machine where I put in unpaid work and tension headaches come out … I [share information] pro bono and, in return, I am micromanaged in real time by strangers; neo-Nazis mine my personal life for vulnerabilities to exploit; and men enjoy unfettered, direct access to my brain so they can inform me, for the thousandth time, that they would gladly rape me if I weren't so fat."

West's experience is echoed in the data presented in the paper by Data and Society and CIPHR. For West, a well-known writer, leaving Twitter may mean a loss of opportunities and connections, but could be a worthwhile trade-off. For many users, however, social media is a lifeline, and leaving isn't so simple.

When the platforms on which such harassment takes place are corporate and American, the solutions to mitigating its harm can seem few. Regulation is possible, but difficult; the United States Constitution is broad in its protections of speech and does not cover everything that users experience as harassment. Furthermore, harassment is often already against the law; the problem therefore lies in enforcement, not regulation.

As such, many advocates have looked toward corporate online platforms, or intermediaries, for solutions. These platforms have the freedom to restrict content as they see fit, be it nudity, violence, or harassment. Most of the popular social media companies, including Facebook, Twitter, and YouTube, have long had policies in place that prohibit harassment, but many users have complained that the companies do not go far enough toward enforcing their rules.

Enforcement, as we will see, is also not always a straightforward task. Companies invest significantly in their reporting and takedown mechanisms, but continue to receive complaints. This next section will explore why moderating content is such a difficult undertaking.

The challenges of content moderation

Content moderation is a profoundly human decision-making process about what constitutes appropriate speech in the public domain. - Kate Crawford

The management of harassment—through content reporting, or flagging tools and punitive consequences—varies significantly from one platform to another. The architecture of a given platform has a lot to do with the variances; a social media platform like Twitter where most content is text-based has different needs than a multimedia platform like Facebook.

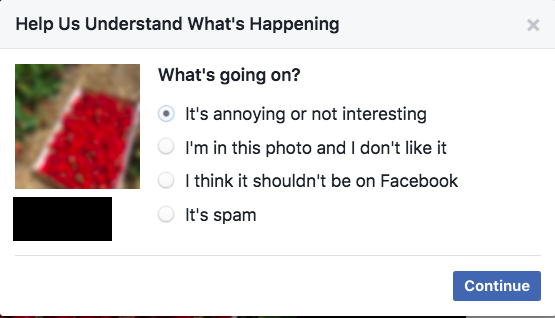

Content moderation works similarly across platforms. When a user sees a piece of content they do not like, they can flag the content for review, triggering a series of questions. For example, when a user flags written content on Facebook, they are prompted to "help us understand what's happening" by answering the question "what's going on?" and selecting from a list of options that includes "I think it shouldn't be on Facebook." Selecting that option results in another prompt, "What's wrong with this?", followed by various options including "It's rude, vulgar, or uses bad language" and "It's harassment or hate speech." Each option prompts a different result; reporting content as "rude" leads to a page describing options for blocking the user, while reporting it as harassment or hate speech results in the content being reported for review.
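The branching in that flagging flow can be pictured as a small lookup. The sketch below is purely illustrative: the option labels are quoted from the description above, but the function name, the outcome labels, and the structure are assumptions made for this example, not a representation of how Facebook's system is actually built.

```python
# Hypothetical model of the two-step flagging flow described in the text.
# Option labels come from the article; every identifier and outcome label
# here is an illustrative assumption, not Facebook's real implementation.

REMOVE_OPTION = "I think it shouldn't be on Facebook"

# Second-step answers mapped to what the article says happens next.
OUTCOMES = {
    "It's rude, vulgar, or uses bad language": "show_blocking_options",
    "It's harassment or hate speech": "send_to_review",
}


def route_report(first_choice: str, second_choice: str) -> str:
    """Return the outcome for a pair of answers given by the reporting user."""
    if first_choice != REMOVE_OPTION:
        # The article does not describe the other first-step branches.
        return "not_described"
    return OUTCOMES.get(second_choice, "not_described")


print(route_report(REMOVE_OPTION, "It's harassment or hate speech"))
print(route_report(REMOVE_OPTION, "It's rude, vulgar, or uses bad language"))
```

Only the harassment or hate speech branch ends with the content in a review queue; the "rude" branch hands the problem back to the reporting user in the form of blocking tools.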

Friction is built into flagging mechanisms to help avoid malicious or unwarranted reports, but this can also be frustrating for users who are experiencing harassment. Furthermore, malicious reporting—reporting content for the purpose of harassing its creator—remains common. In 2014, more than forty Vietnamese journalists and activists found that their Facebook accounts had been deactivated after they were targeted by organised reporting campaigns coordinated by government supporters. Although Facebook denies that mass reporting leads to account deactivation, there are numerous ways that reports can trigger shutdowns—for example, if a user is reported for using a fake name and doesn't feel comfortable submitting their ID upon request from the company.

In 2014, Twitter partnered with Women, Action, and the Media (WAM!) in an effort to improve its approach to tackling harassment. For about one month, WAM! was given special reporter status by Twitter to receive, analyse, and escalate (as necessary) reports of harassment to Twitter for special attention. WAM! later produced a report based on their experience that included data on the types of reports they received as well as recommendations for Twitter.

WAM! found a number of issues with Twitter's reporting process, malicious reporting among them. One major issue that they discovered was that evidence on the platform is often ephemeral; harassers often delete their tweets, making it difficult for the company to act on reports.

"If Twitter, Facebook or Google wanted to stop their users from receiving online harassment, they could do it tomorrow," Jessica Valenti argued in the Guardian in 2014. Valenti refers to YouTube's Content ID program—which scans the platform's videos to identify copyrighted content—as an example of companies' willingness and ability to vigilantly monitor content.

Content ID works well because uploaded videos are scanned and compared against an existing database of files submitted by content owners. A content creator who posts their works on YouTube—and most social media platforms—can flag content as unauthorised to submit it for review. The content is then (in the case of YouTube) scanned against a database, or reviewed by human moderators.
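The database comparison described above can be sketched in a few lines. This is a deliberately simplified stand-in, not YouTube's method: it uses an exact SHA-256 digest from Python's `hashlib` where a production system would rely on far more robust audio and video fingerprinting, and the class name, method names, and sample data are all hypothetical.

```python
import hashlib


def fingerprint(data: bytes) -> str:
    """Exact-match digest; an assumed stand-in for real media fingerprinting."""
    return hashlib.sha256(data).hexdigest()


class ReferenceDatabase:
    """Hypothetical store of reference files submitted by content owners."""

    def __init__(self) -> None:
        self._owners = {}  # maps digest -> owner name

    def register(self, owner: str, data: bytes) -> None:
        """Record a reference file on behalf of its owner."""
        self._owners[fingerprint(data)] = owner

    def match(self, upload: bytes):
        """Return the owner of a matching reference file, or None."""
        return self._owners.get(fingerprint(upload))


db = ReferenceDatabase()
db.register("Example Studio", b"reference video bytes")

print(db.match(b"reference video bytes"))  # Example Studio
print(db.match(b"Reference video bytes"))  # None
```

The second lookup fails because a single changed byte yields a different digest. Matching of this kind only works when there is a known reference file to compare against, which is what content owners supply in the copyright case.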

Moderating harassment is not as simple. While images or videos could theoretically be moderated in a similar way to copyrighted content, text presents a particular challenge for companies. Fair content moderation requires a human review process: workers who read flagged messages and determine whether or not they violate a given set of rules. As The Guardian's Facebook Files (a set of internal moderation documents leaked in May 2017) demonstrate, however, commercial content moderators are often underpaid and, moreover, report experiencing psychological issues as a result of their work. These problems, along with the overall reduction in cost, are some of the reasons that Silicon Valley companies are currently pushing for increased use of AI in content moderation.

But while AI algorithms can understand simple reading comprehension problems, they struggle with basic tasks, "such as capturing meaning in children's books," indicating that the technology is not yet ready to be used for moderating more sophisticated speech. In other words, AI may mistake satire or other commentary for harassment. Furthermore, although harassment can come in the form of videos or images, much of it is in the form of text, which cannot just be scanned against a database, even if one existed. Hateful messages, once flagged by a user, need to go through a human review process.

Journalist Sarah Jeong, in response to Valenti's argument, wrote: "She's technically right that they could stop harassment with 100% effectiveness, in that if you cease to receive all communications you cease to receive harassment. The question is, is it possible for blunt algorithmic instruments to be effective without being too broad?" On that question, the jury is still out.

Content moderation is a complex process, made more difficult by the opacity with which most social media companies operate. While pushing social media companies to handle harassment concerns and complaints is a worthwhile endeavour, the expectation that companies will be able to solve the problem at hand is unrealistic; given the number of users and harassment complaints that arise each day, it seems an impossible task to manage at scale.

Platform solutionism: Who should be responsible for tackling harassment?

Beyond the question of whether platforms can be relied upon to tackle harassment lies the question of whether they ought to be. Writers like Valenti see companies as having a primary responsibility to manage online harassment, a position that is not without challenges.

Technological solutionism, a term popularized by Evgeny Morozov, is the idea that, "given the right code, algorithms and robots, technology can solve all of mankind's problems, effectively making life 'frictionless' and trouble-free." Platform solutionism, it follows, is the idea that platforms like Facebook and Twitter can effectively solve the problems of harassment, terrorism, hate speech, and the like through policies and user tools.

Proponents of platform solutionism may see corporate policy changes and enforcement as a means to an end, a best available fix for a pervasive and difficult problem. They are unlikely to view platform solutions as ideal, only as the best available, yet they often fail to take into account the political and speech implications of relying on corporations to police the Internet.

On the other hand, there are those who see relying on platforms to police harassment and other societal ills as politically problematic, taking away power from traditional institutions of governance and placing it in the hands of corporations. Critics of platform solutionism may view the existing censorship occurring on social media platforms as evidence of their inability to effectively identify unlawful (or unpermitted) speech.

Writer and activist Soraya Chemaly argues, "There is no one organization or institution responsible for solving the problem of 'online harassment'." Chemaly's assessment, that harassment is a social problem that requires comprehensive social solutions, does not negate the responsibility that social media platforms have toward their users, but instead seeks a holistic approach involving education, platform policing, legal reform, and greater engagement with law enforcement.

Indeed, content moderation alone will not solve the pervasive problem of online harassment, but should be viewed as merely one piece of a broader approach. At the same time, proponents of greater platform policing should be wary of placing too much trust in corporations, whose ultimate driver is their bottom line and not the best interests of their users.

Jillian C. York is a writer and activist who examines the impact of technology on our societal and cultural values. She writes, speaks and organizes so that everyone can enjoy unhindered access to their digital rights. She is the Director for International Freedom of Expression at the Electronic Frontier Foundation and a fellow at the Center for Internet & Human Rights at the European University Viadrina.

The views expressed in this article are the author's own and do not necessarily reflect Tactical Tech's editorial stance.
